2024-03-06 13:47:43

Secret Agent Cat!

/

Beware of pretty faces that you find

A pretty face can hide an evil mind

Ah, be careful what you say

Or you'll give yourself away

Odds are he won't live to see tomorrow

Secret agent Cat, secret agent Cat

They've given you a number

And taken away your name

(Johnny Rivers)

/

#AI

2024-03-05 12:40:45

Filing: GB News owner All Perspectives has been forced to provide a further £41M in funding to cover operating costs, and is now owed £83.8M by GB News (Daniel Thomas/Financial Times)

https://t.co/UVJqiksHKE

2024-03-02 01:33:30

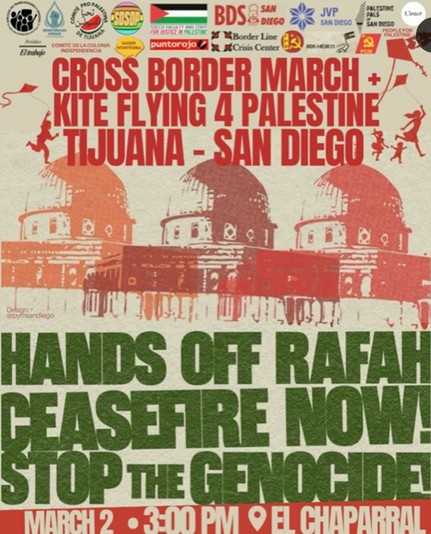

Cross Border March

Kite flying 4 Palestine

Tijuana -- San Diego

Hands off Rafah

Ceasefire Now

Stop the Genocide

March 2 3:00 PM El Chaparral

#FreePalestine #Protest #Tijuana

2024-03-06 13:47:43

Secret Agent Cat!

/

Beware of pretty faces that you find

A pretty face can hide an evil mind

Ah, be careful what you say

Or you'll give yourself away

Odds are he won't live to see tomorrow

Secret agent Cat, secret agent Cat

They've given you a number

And taken away your name

(Johnny Rivers)

/

#AI

2024-04-05 09:27:35

Ein paar Tage im wilden Westen der Toskana unterwegs per Eisenbahn. Perfekter Ausgangspunkt war das Hotel Gran Tourismo am Bahnhof Grosseto dessen Rezeption schon wie eine Empfangshalle wirkt. Von dort fahren Züge bis Rom, Pisa, nach Tarquinia, Follonica, Siena oder Paganico oder der Bus an den Strand. Auch Regionalzüge, für die es ein günstiges 3- oder 5-Tagesticket gibt. Zurück fahren wir über La Spezia, Genua und ab Mailand per Nacht-Flixbus nach Bregenz. Der Weg ist unser Ziel.

2024-02-29 12:26:03

2024-03-04 19:57:07

Far be it from me to tell a country how to genocide, but it seems like a bad idea to indiscriminately bomb a place that has hostages you want returned.

https://www.theguardian.com/world/2024/mar/03/we-were-const…

2024-05-01 06:49:12

Better & Faster Large Language Models via Multi-token Prediction

Fabian Gloeckle, Badr Youbi Idrissi, Baptiste Rozi\`ere, David Lopez-Paz, Gabriel Synnaeve

https://arxiv.org/abs/2404.19737 https://arxiv.org/pdf/2404.19737

arXiv:2404.19737v1 Announce Type: new

Abstract: Large language models such as GPT and Llama are trained with a next-token prediction loss. In this work, we suggest that training language models to predict multiple future tokens at once results in higher sample efficiency. More specifically, at each position in the training corpus, we ask the model to predict the following n tokens using n independent output heads, operating on top of a shared model trunk. Considering multi-token prediction as an auxiliary training task, we measure improved downstream capabilities with no overhead in training time for both code and natural language models. The method is increasingly useful for larger model sizes, and keeps its appeal when training for multiple epochs. Gains are especially pronounced on generative benchmarks like coding, where our models consistently outperform strong baselines by several percentage points. Our 13B parameter models solves 12 % more problems on HumanEval and 17 % more on MBPP than comparable next-token models. Experiments on small algorithmic tasks demonstrate that multi-token prediction is favorable for the development of induction heads and algorithmic reasoning capabilities. As an additional benefit, models trained with 4-token prediction are up to 3 times faster at inference, even with large batch sizes.

2024-02-19 18:55:39

Filing: the company behind MariaDB, which raised ~$230M before its 2022 IPO, may be taken private by K1 in a $37M deal, far below its $672M Series D valuation (Paul Sawers/TechCrunch)

https://techcrunch.com/2024/02/19/stru

2024-03-01 18:19:55

Overhead (not really):

"A shame... [[Moloch]] has taken them."